BentoML Is Joining Modular

We’re excited to share that BentoML has joined Modular! After months of collaborating on customer deployments together, we’re thrilled to officially become one team, as part of a strategic product acquisition.

This partnership strengthens our ability to support the BentoML project and community. Modular shares our commitment to open source and making AI infrastructure accessible. Together, we’ll be able to push the platform further with capabilities that weren’t possible before.

Why this partnership makes sense

BentoML’s mission has always been to help teams run AI inference in production without compromising on speed, cost, or control. We’ve built a multi-cloud inference platform that gives teams flexibility over where and how they deploy AI.

Modular is building a unified AI compute stack, from the Mojo programming language to the MAX inference engine, that enables high-performance inference across diverse hardware. BentoML brings the cloud layer: the tooling teams rely on to deploy, operate, and scale inference across real-world environments, including multi-cloud and BYOC setups.

Inference systems are complicated: they span many layers, and getting every layer right is hard. With vertically integrated infrastructure, teams can optimize end to end and reach better performance faster.

What this means for you

For BentoML customers

Our focus remains the same. We will continue to support production inference across multi-cloud, BYOC, and security-sensitive environments. Your systems will keep running without disruption, with new capabilities coming as we integrate deeper with Modular’s compute stack.

For our open-source community

BentoML remains Apache 2.0. Our commitment to open source does not change. We’ll continue supporting the workflows and community that have grown around BentoML.

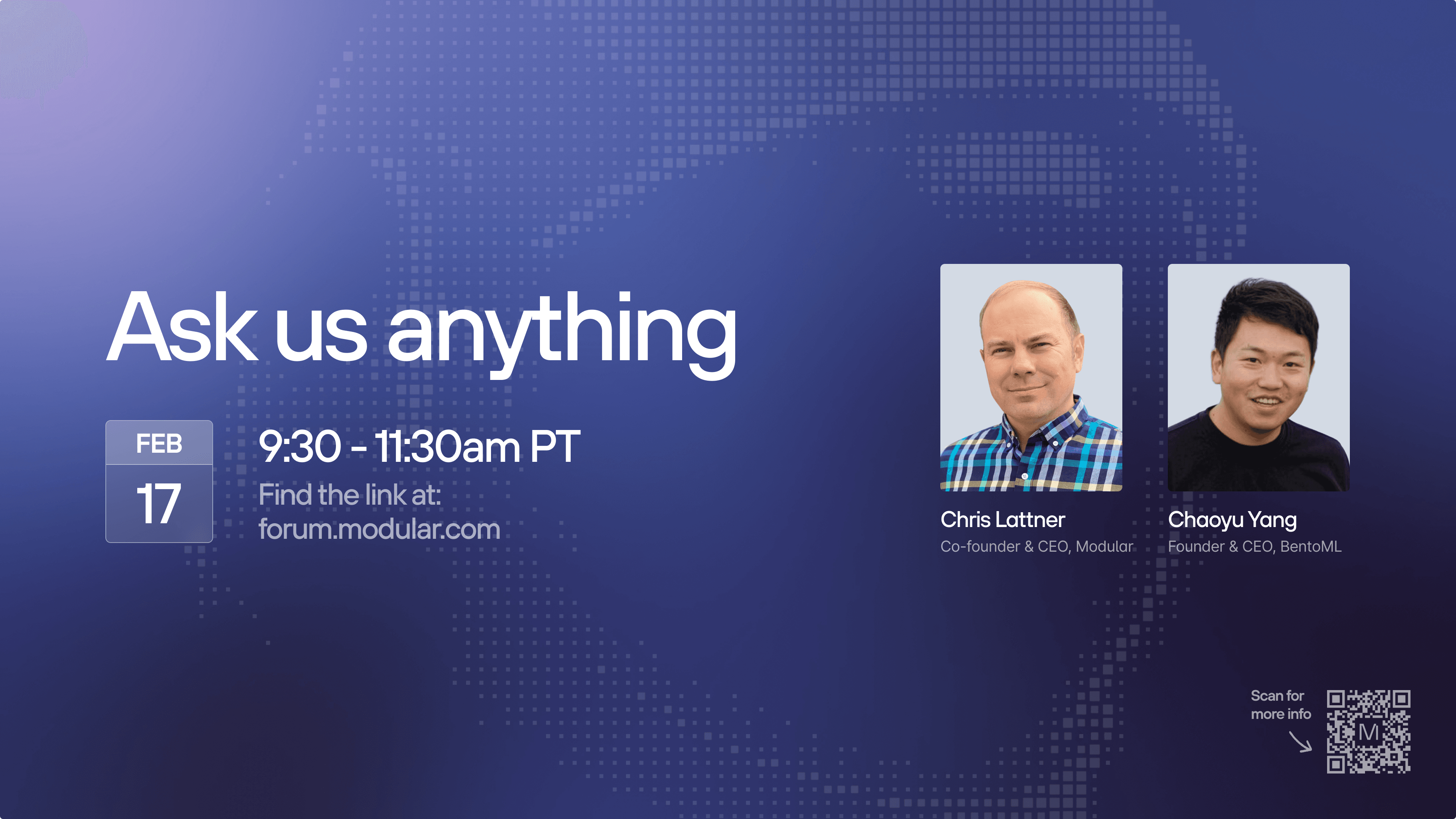

We’re also hosting an AMA with Chris Lattner (Modular CEO) and Chaoyu Yang (BentoML founder) on Tuesday, February 17th from 9:30am-11:30am PT in the Modular Forum, where we’ll share more details and answer your questions.

Looking ahead

Inference is shifting from an operational detail to a strategic capability. Teams that can optimize across the full stack and deploy across hardware and clouds can push performance limits for their workloads, deploy where it makes business sense, and adapt their infrastructure as needs evolve.

Joining Modular allows us to push further in that direction, alongside the customers and community building with us.

If you want to learn more:

- Check out what Modular is building at modular.com.

- We are actively hiring for roles building the Modular Cloud and more.

- Interested in seeing the Modular Stack in action for your workload? Let’s chat!

Thank you for trusting BentoML and helping shape what we build. We’re grateful to our customers, open-source community, investors, and team for making this next chapter possible.