A Guide to Compound AI Systems

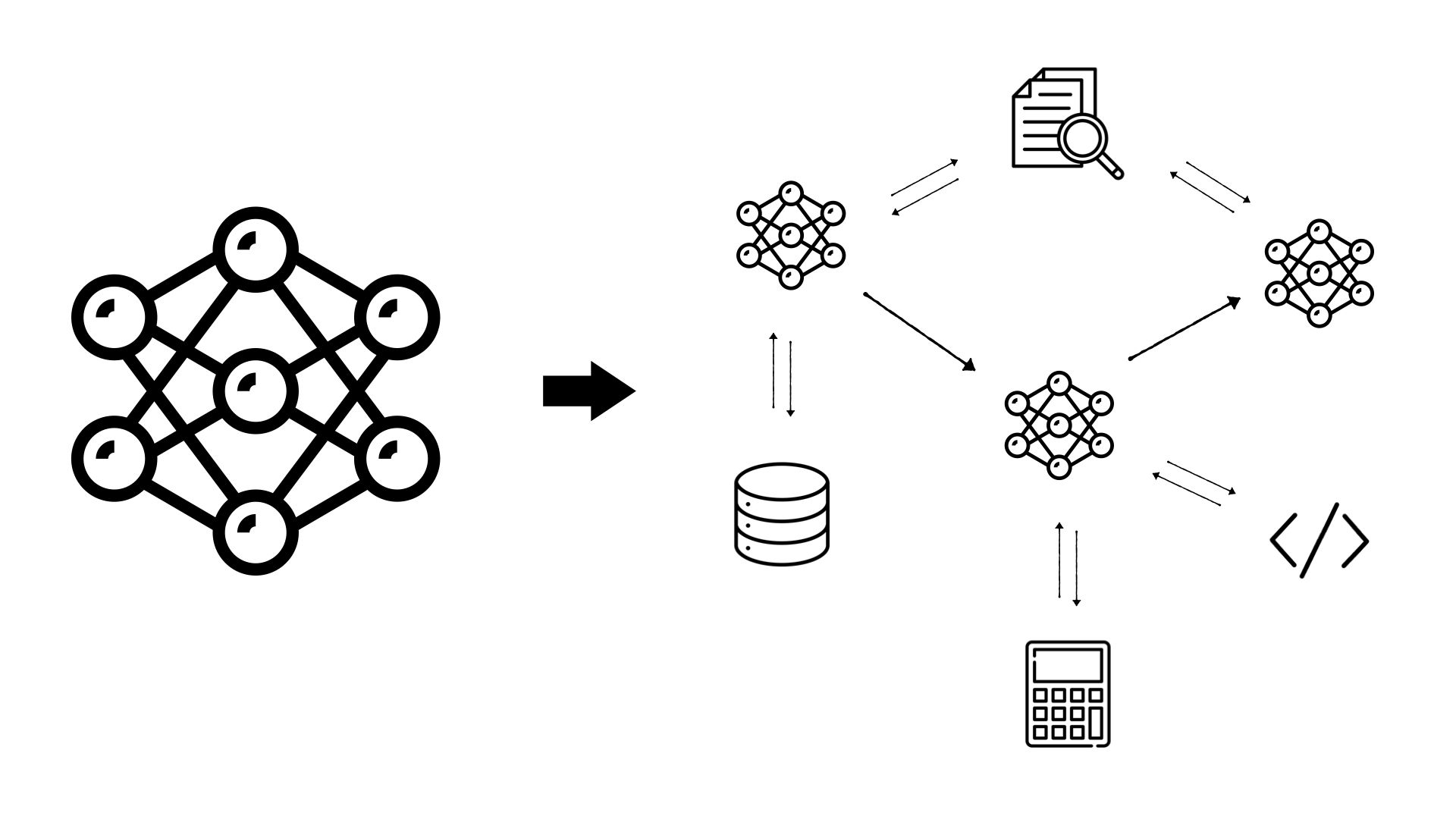

A compound AI system uses multiple interacting components to tackle some of the most challenging AI tasks. Common examples include calling a single LLM multiple times, chaining multiple models, and letting models call external tools. This design is effective because the power of an AI model lies not only in its individual capabilities but also in how it is used within a broader system.

Many of the AI services we use today are powered by compound AI systems, even if it's not immediately apparent:

- ChatGPT uses web search tools to fetch the most current information to overcome the limitations of its training data's knowledge cutoff.

- GitHub Copilot constructs its prompts by navigating the code base to extract relevant code context and documentation, which it then uses to provide code completion suggestions.

- Character AI understands and generates natural-language speech by combining transcription and text-to-speech models with fine-tuned LLMs.

Our community of AI builders is also actively harnessing the power of compound AI systems across a variety of use cases:

- Video analytics: A stream processor that chunks a live video feed into frames and analyzes the frames with vision models.

- LLM safety: A gateway that screens prompts for dangerous content, NSFW material, and harassment before forwarding them to an LLM.

- Voice assistants: An assistant that classifies actions based on voice commands, extracts function arguments with an LLM, and calls the specific functions to complete the actions.

- Document processors: An indexing pipeline that extracts information and converts unstructured data into embeddings using OCR, semantic chunking, and embedding models, before storing them in a vector database.
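
The voice assistant pattern above (classify the action, extract arguments, call the function) can be sketched in a few lines. This is a minimal illustration, not a production design: `classify_action` and `extract_arguments` are hypothetical keyword-based stand-ins for the classifier and the LLM, and the two tools are invented for the example.

```python
from typing import Callable

# Hypothetical tools the assistant can call; a real system would wrap actual APIs.
def set_timer(minutes: int) -> str:
    return f"timer set for {minutes} min"

def play_music(artist: str) -> str:
    return f"playing {artist}"

TOOLS: dict[str, Callable[..., str]] = {"set_timer": set_timer, "play_music": play_music}

def classify_action(transcript: str) -> str:
    """Stand-in for an intent classification model (keyword rule only)."""
    return "set_timer" if "timer" in transcript.lower() else "play_music"

def extract_arguments(action: str, transcript: str) -> dict:
    """Stand-in for LLM-based argument extraction."""
    if action == "set_timer":
        digits = [int(tok) for tok in transcript.split() if tok.isdigit()]
        return {"minutes": digits[0] if digits else 1}
    # Treat everything after the word "play" as the artist name.
    artist = transcript.lower().split("play", 1)[-1].strip()
    return {"artist": artist or "anything"}

def handle_command(transcript: str) -> str:
    """Run the three components in order: classify, extract, call."""
    action = classify_action(transcript)
    args = extract_arguments(action, transcript)
    return TOOLS[action](**args)
```

Because each stage is a separate function, any one of them can be swapped for a real model without touching the others.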

Why should AI teams care about compound AI systems?

According to Berkeley AI Research (BAIR), we are heading toward a future of compound AI systems for four major reasons:

- Certain tasks are easier to improve via system design: Compound systems outperform single models in certain applications by providing better returns on investment.

- Systems can be dynamic: AI models are limited by the scope of their training data. Compound systems can utilize external resources like databases and retrieval systems to access timely data, which makes them more dynamic.

- Improved control and trust: By integrating multiple components, compound systems can provide better control over outputs and behaviors, such as reducing error rates via cross-verification among components.

- Varied performance goals: Unlike individual models, which offer a fixed level of quality at a set cost, compound systems are flexible in balancing cost with quality. This is important for creating custom solutions with specific needs and budgets.
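
One way to picture the cost/quality flexibility described above is a model cascade: try a cheap model first and escalate to an expensive one only when the cheap answer looks unreliable. The sketch below is an assumption-laden illustration; `small_model`, `large_model`, the confidence values, and the cost units are all hypothetical stand-ins.

```python
from dataclasses import dataclass

@dataclass
class Answer:
    text: str
    confidence: float  # in [0, 1], as reported by the (hypothetical) model
    cost: float        # arbitrary cost units

def small_model(query: str) -> Answer:
    # Stand-in: pretend that short queries are easy for the small model.
    confidence = 0.9 if len(query.split()) <= 5 else 0.4
    return Answer(f"small:{query}", confidence, cost=1.0)

def large_model(query: str) -> Answer:
    # Stand-in: always confident, but ten times more expensive.
    return Answer(f"large:{query}", 0.95, cost=10.0)

def cascade(query: str, threshold: float = 0.8) -> Answer:
    """Return the cheap answer if it clears the threshold, else escalate."""
    first = small_model(query)
    if first.confidence >= threshold:
        return first
    second = large_model(query)
    # The total cost includes the cheap attempt that was discarded.
    return Answer(second.text, second.confidence, first.cost + second.cost)
```

Raising `threshold` shifts more traffic to the large model (higher quality, higher cost); lowering it does the opposite.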

The growing trend towards compound AI systems unlocks new opportunities for AI teams:

- Integrating with proprietary data. Models are trained on general knowledge and are limited by a cutoff date, meaning they lack the latest information about your specific domain. By integrating compound AI systems with information retrieval, AI teams can enable models to answer queries based on specific data.

- Working with your APIs. Compound AI systems can integrate with your existing APIs, enabling them to take actions on your behalf. For example, they can call automated tools to handle routine tasks or complex workflows.

- Building with multiple models. As the open-source model ecosystem thrives, AI teams can leverage a rich array of models that can be combined to tackle sophisticated tasks. This modular approach is cheaper than developing and training bespoke monolithic models for specific tasks.

- Improving privacy, safety, and control. Compound AI systems provide better safety and control over sensitive data. AI teams can use them to detect and prevent leaks of sensitive information to third-party LLMs, and to safeguard against dangerous content, harassment, hate speech, and NSFW content. Furthermore, AI teams can dictate the exact schema or format in which outputs are generated, ensuring the results match their expectations.
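
The last point can be illustrated with two small guard components placed around a third-party LLM call: one redacts sensitive values from the prompt, the other checks that the response follows an expected schema. This is a rough sketch only. The regular expressions are deliberately simplistic, and the `sentiment`/`score` schema is a hypothetical example rather than anything prescribed by a specific tool.

```python
import json
import re

# Simplistic patterns for illustration; real detectors need far more care.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional separators

def redact(prompt: str) -> str:
    """Mask e-mail addresses and card-like numbers before the prompt leaves your system."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return CARD.sub("[CARD]", prompt)

# Hypothetical output schema: field name -> required Python type.
REQUIRED_FIELDS = {"sentiment": str, "score": float}

def validate_output(raw: str) -> dict:
    """Parse the model response as JSON and enforce the expected fields and types."""
    data = json.loads(raw)  # raises ValueError (JSONDecodeError) on malformed JSON
    for name, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(name), expected_type):
            raise ValueError(f"field {name!r} is missing or not {expected_type.__name__}")
    return data
```

A gateway would call `redact` before forwarding the prompt and `validate_output` on the way back, retrying or rejecting the response when validation fails.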

Build and scale compound AI systems

In an effective compound AI system, multiple components often run on distributed instances, such as separate GPU machines, interacting with each other seamlessly to function as a single unit. Building such a system presents a variety of complex challenges. This is why we built BentoML, the platform for building and scaling compound AI systems.

Building

A compound AI system may require developing components with different models on distributed instances that support sequential and parallel inference. BentoML supports developing distributed applications holistically as intuitive Python modules. This approach makes it easy to interact with components of the system as if you're just making regular Python function calls, while also ensuring that the I/O is handled efficiently.
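
To make "sequential and parallel inference" concrete, here is a minimal sketch in plain Python `asyncio`. It does not use the BentoML API; `transcribe`, `summarize`, and `detect_language` are hypothetical stand-ins for model components, with `asyncio.sleep` simulating inference latency.

```python
import asyncio

async def transcribe(audio: str) -> str:
    await asyncio.sleep(0.01)  # simulated inference latency
    return audio.upper()

async def summarize(text: str) -> str:
    await asyncio.sleep(0.01)
    return text[:10]

async def detect_language(text: str) -> str:
    await asyncio.sleep(0.01)
    return "en"

async def pipeline(audio: str) -> dict:
    # Sequential step: both downstream components need the transcript.
    text = await transcribe(audio)
    # Parallel step: the two components are independent, so run them concurrently.
    summary, language = await asyncio.gather(summarize(text), detect_language(text))
    return {"text": text, "summary": summary, "language": language}

def run_pipeline(audio: str) -> dict:
    """Synchronous entry point for callers outside an event loop."""
    return asyncio.run(pipeline(audio))
```

The calling code reads like ordinary function calls, while the `gather` step lets independent components overlap instead of waiting on each other.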

Optimizing

A key to enhancing the performance of compound AI systems is accelerating model inference while making the right trade-offs to optimize performance characteristics, such as balancing latency versus throughput of LLM decoding. BentoML allows you to choose the best inference backend that suits your specific needs.

Scaling

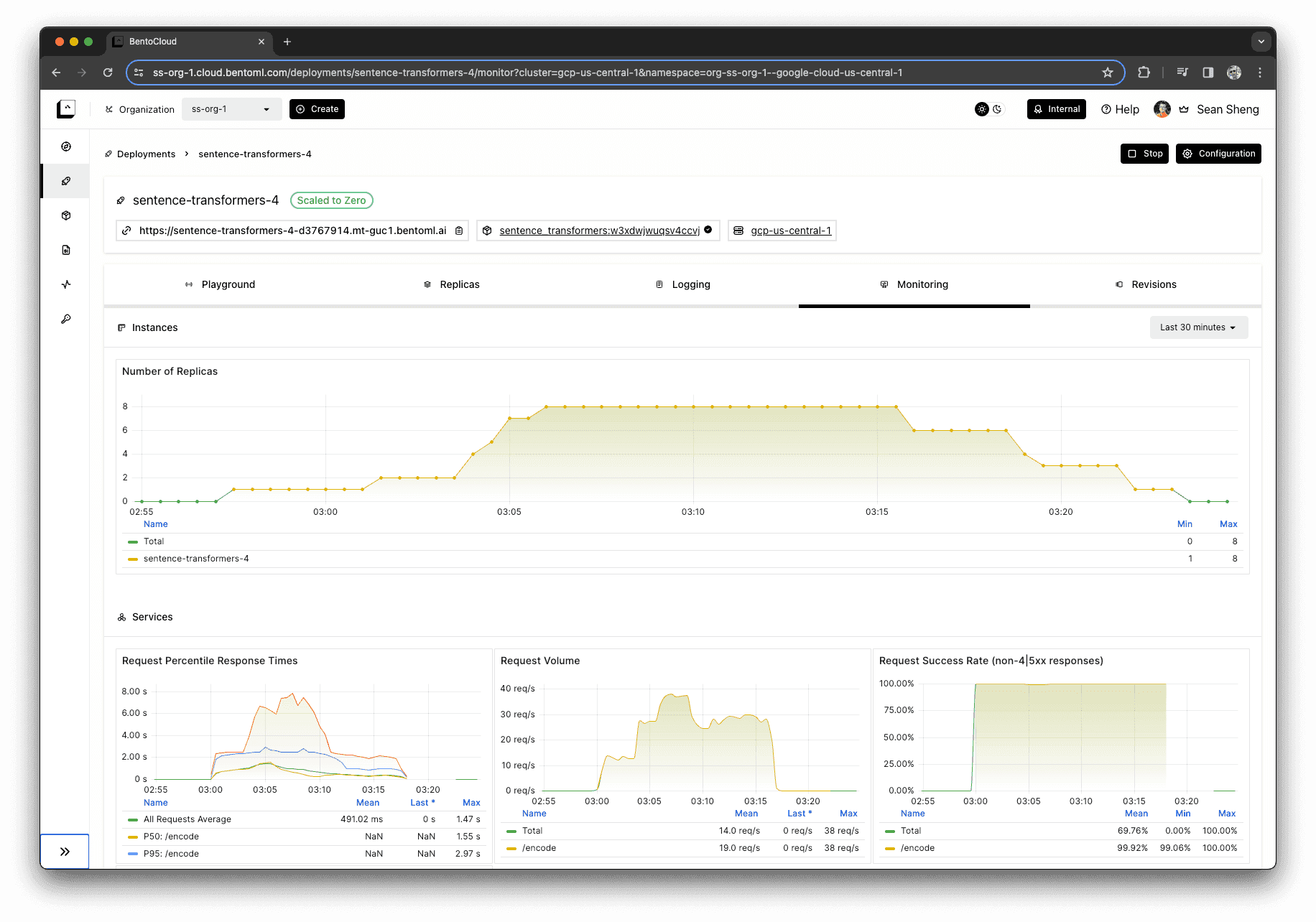

As demand changes, it's crucial yet challenging to scale the system in production. BentoML supports autoscaling to optimize the number of replicas for each component within the system, and it can scale idle components to zero to conserve resources and reduce costs.
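
As a rough illustration of per-component autoscaling with scale-to-zero, the sketch below derives a replica count from the number of in-flight requests. It is a generic concurrency-based rule written for this example, not BentoML's actual algorithm; the function name and all parameters are hypothetical.

```python
import math

def desired_replicas(
    in_flight: int,
    target_concurrency: int,
    min_replicas: int = 0,
    max_replicas: int = 10,
) -> int:
    """Replicas needed so each one handles at most `target_concurrency` requests."""
    if target_concurrency <= 0:
        raise ValueError("target_concurrency must be positive")
    needed = math.ceil(in_flight / target_concurrency)
    # Clamp to the configured bounds; min_replicas=0 permits scale-to-zero when idle.
    return max(min_replicas, min(max_replicas, needed))
```

Each component in the system would get its own bounds and target, so a GPU-heavy model and a lightweight preprocessor can scale independently.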

BentoML not only tackles these challenges but also provides complete observability for all components. It allows developers to build and deploy their compound AI systems to production with full confidence, knowing every aspect of the system is monitored and controlled.

Check out the following resources to learn more:

- [Doc] Model composition

- [Blog] BentoCloud: Fast and Customizable GenAI Inference in Your Cloud

- Sign up for BentoCloud to deploy your first compound AI system

- Join our Slack community

- Contact us if you have any questions about building and scaling compound AI systems